Challenges using Cloud Foundry and Consul

Background

Diego is one of the primary subsystems of Cloud Foundry, managing the runtime operations of applications and services. Diego is distributed in nature and, like many other distributed systems, requires its components to be highly available and discoverable. Diego internally used Consul to meet both requirements. Within Cloud Foundry, however, rolling deployments of components often caused instability and downtime in the system, mostly due to failures in Consul.

After detailed examination of observed problems, Cloud Foundry chose to use alternative strategies to provide discoverability and availability wherever possible and reduce dependency on Consul. In this post, we will go over lessons learned from using Consul in Cloud Foundry, and the alternative strategies we used to help improve the robustness and reliability of Diego components and Cloud Foundry in general.

What is Consul?

Consul provides an elastic and highly available mechanism for configuring and discovering services in a distributed system. It provides discoverability and health checks for instances of an existing service and offers a key/value store that facilitates dynamic configuration, coordination, and leader election among other capabilities.

The availability and reliability that Consul offers are based on the promises of the RAFT protocol, which provides consistency by achieving consensus across the Consul nodes that together form a quorum. Under RAFT, a quorum can form only when a majority of the nodes in a Consul cluster agree on a leader. Once elected, the leader plays a critical role: it ingests data entries from clients, replicates those entries to the rest of the cluster, and decides whether a given entry is committed, that is, stored and distributed to all members of the cluster. In essence, this guarantees that every member of the cluster converges on the same committed state.
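The majority rule above reduces to simple arithmetic. The following is a minimal sketch of it; the function names are ours for illustration and are not part of Consul.

```python
def quorum_size(cluster_size: int) -> int:
    """Smallest number of servers that forms a strict majority."""
    if cluster_size < 1:
        raise ValueError("cluster_size must be at least 1")
    return cluster_size // 2 + 1


def failures_tolerated(cluster_size: int) -> int:
    """How many servers can be lost while a majority still remains."""
    return cluster_size - quorum_size(cluster_size)


def has_quorum(cluster_size: int, healthy: int) -> bool:
    """True when the healthy servers are enough to agree on a leader."""
    return healthy >= quorum_size(cluster_size)
```

For a three-node cluster, `quorum_size(3)` is 2, so the cluster survives the loss of one node but not two. This is why a rolling deploy that disturbs more than one node at a time is risky.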

How is Consul used in Cloud Foundry?

The combination of service discovery, health checks, and a distributed key/value store that keeps system state and facilitates leader election made Consul a promising fit for a Cloud Foundry deployment. Consul is used in Cloud Foundry to serve three main purposes:

- Service Discovery

- System State Store

- Distributed Locking

Service discovery in Cloud Foundry is achieved by allowing components to register themselves with the Consul DNS and then using DNS resolution to discover and communicate with one another. The key/value store in Consul is used by Cloud Foundry components to preserve system information, such as the state of running apps and the related metadata. Finally, the key/value store in Consul is also utilized to implement a distributed locking mechanism that enables coordination among multiple instances of Cloud Foundry components that require high availability.
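To make the DNS-based discovery concrete, here is a small sketch of how a component might build and resolve a Consul service name. It assumes Consul's documented DNS name layout (`[tag.]<service>.service[.datacenter].<domain>`, with `consul` as the default domain). The `cf.internal` domain in the usage note and the injectable `lookup` parameter are assumptions made for illustration.

```python
import socket
from typing import Callable, List, Optional


def consul_service_name(
    service: str,
    domain: str = "consul",
    tag: Optional[str] = None,
    datacenter: Optional[str] = None,
) -> str:
    """Build the DNS name under which a registered service is published."""
    parts = []
    if tag:
        parts.append(tag)
    parts += [service, "service"]
    if datacenter:
        parts.append(datacenter)
    parts.append(domain)
    return ".".join(parts)


def resolve(name: str, lookup: Callable = socket.gethostbyname_ex) -> List[str]:
    """Return the IPv4 addresses behind `name`, or an empty list on failure."""
    try:
        _, _, addresses = lookup(name)
    except OSError:
        # The resolver is unreachable or the record does not exist.
        return []
    return list(addresses)
```

A component looking for the BBS would call something like `resolve(consul_service_name("bbs", domain="cf.internal"))`. An empty result is exactly what callers see when Consul is down and the record can no longer be served.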

For these components, high availability is obtained through an active-passive replication mechanism. In Cloud Foundry’s active-passive replication mode, at any point in time, a single instance of the component holds the distributed lock and acts as the active master instance. The remaining instances operate in a passive standby mode, waiting for the lock to be freed (e.g., when the master fails) so that one of them can take on the active role.
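The active-passive pattern can be sketched with an expiring lock. The code below is a toy in-memory model, not Consul's API (Consul builds locks on sessions and its key/value store). The class names, the `bbs_lock` key and the 15-second TTL are hypothetical.

```python
from typing import Dict, Optional, Tuple


class InMemoryLockStore:
    """Toy stand-in for a key/value store that offers expiring locks."""

    def __init__(self) -> None:
        # key -> (owner, expires_at)
        self._locks: Dict[str, Tuple[str, float]] = {}

    def acquire(self, key: str, owner: str, ttl: float, now: float) -> bool:
        """Take or renew the lock unless another owner holds a live one."""
        current = self._locks.get(key)
        if current is not None and current[0] != owner and current[1] > now:
            return False
        self._locks[key] = (owner, now + ttl)
        return True

    def holder(self, key: str, now: float) -> Optional[str]:
        """Return the owner of a live lock, or None if it is free or expired."""
        current = self._locks.get(key)
        if current is None or current[1] <= now:
            return None
        return current[0]


class Instance:
    """One replica of a component; it is active only while it holds the lock."""

    def __init__(self, name: str, store: InMemoryLockStore,
                 key: str = "bbs_lock", ttl: float = 15.0) -> None:
        self.name = name
        self.store = store
        self.key = key
        self.ttl = ttl
        self.active = False

    def tick(self, now: float) -> bool:
        """Try to take or renew the lock; the role follows the result."""
        self.active = self.store.acquire(self.key, self.name, self.ttl, now)
        return self.active
```

In this model, a standby instance that keeps calling `tick` is refused for as long as the master renews the lock, and takes over only after the master stops renewing and the TTL runs out. If the lock store itself disappears, no instance can prove that it is the active one.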

Consul in CF Diego

To provide more details on how Cloud Foundry utilizes Consul, let us take a closer look at how it is used in Diego, the new generation of the Cloud Foundry runtime. Diego is a distributed runtime that can be deployed together with other components in Cloud Foundry to schedule and run both short-lived and long running processes as part of the Cloud Foundry ecosystem.

Clients submit tasks and processes to Diego’s Bulletin Board System (BBS). Then the BBS consults with the Auctioneer component whose primary role is to find, schedule and delegate execution of tasks to a Cell Rep. The Cell Rep takes on the responsibility of executing processes by creating a container, deploying the code for the target process into the container, making the process available to the outside world by making it routable through Route-Emitters, and then managing and monitoring the execution of the process while updating the status of the process on the BBS.

Among all the components in Diego, the BBS, the Auctioneer, the Cell Reps and the Route-Emitters make heavy use of Consul features. All components in Diego benefit from Consul’s service discovery by registering themselves so that other components in the system can find them. Earlier generations of the BBS used Consul to store system state, that is, the state of all running processes and tasks. Similarly, the Cell Reps use Consul to store their metadata, advertise their presence and accept work (i.e., responsibility for running processes). The BBS, the Auctioneer and the Route-Emitters also use the distributed locking mechanism built on top of Consul to implement active-passive availability and to guarantee that only one instance of each of these components is active at any given point in time.

In the rest of this post, we’ll go over the problems Diego faces when Consul fails.

Challenges with Consul in Cloud Foundry

The majority of the challenges with using Consul in Cloud Foundry stem from the way that BOSH handles the lifecycle of the software it monitors. BOSH is the primary tool for deploying and managing Cloud Foundry components (including Consul). BOSH is primarily designed for managing stateless services that adhere to twelve-factor app principles, or basic stateful services. Consul is not only a stateful service but also a clustered one, and this combination does not play well with the way that BOSH wants to manage software. Consul relies on the RAFT consensus algorithm for its consistency guarantees, which has proven to be one of the main driving factors behind its stability problems with BOSH.

An important concept in a RAFT-based distributed cluster is that the cluster relies on one of its nodes to act as the leader that coordinates and manages the other nodes. Even though a RAFT-based system can theoretically remain operational with more than one leader in its cluster, the cluster loses its consistency guarantees in the presence of two or more leaders. This situation is generally referred to as Split Brain. We will not go into deeper detail about RAFT in this blog post; you can find more information about RAFT here. It is crucial to point out, however, that Split Brain Consul clusters make Cloud Foundry dysfunctional.

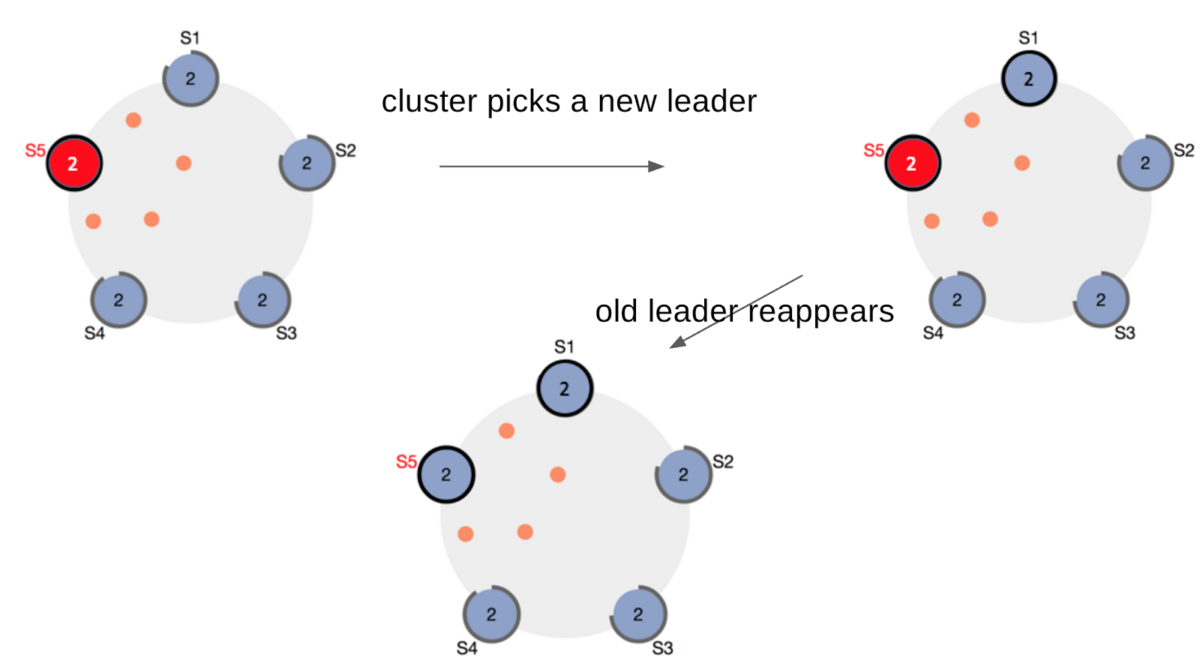

For example, when BOSH performs a rolling deployment of the system, it may stop the Consul leader ungracefully. With the leader terminated unexpectedly, the remaining nodes in the Consul cluster can fail to form a quorum. Alternatively, we have witnessed during a BOSH deploy that when the leader takes longer than expected to restart, the cluster may attempt to elect a new leader. Once the initial leader is back and tries to rejoin the cluster, its state is out of sync with the rest of the cluster, which puts the entire Consul cluster in a bad state and can cause the whole cluster to fail. The figure above illustrates this situation, in which BOSH takes down node S2. When S2 comes back up after the cluster has already selected S1 as the new leader, we run into the Split Brain situation.

Another case of failure we have noticed during a rolling deploy is when BOSH deletes the data for more than one Consul node at the same time. When the nodes restart and try to rejoin the cluster, both nodes try to bootstrap, in which case they both may end up trying to be the leader for the cluster, which in turn can cause failure of the entire cluster.
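The double-bootstrap failure can be illustrated with a deliberately simplified model. It assumes that a server restarting with no stored data and with bootstrapping enabled elects itself leader. The `Server` type and its fields are hypothetical, and the model ignores everything else RAFT does.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Server:
    name: str
    has_raft_data: bool  # False models a node whose data was deleted
    bootstrap: bool      # True models a node allowed to elect itself

    def role_after_restart(self) -> str:
        """A wiped node in bootstrap mode has no history and elects itself."""
        if not self.has_raft_data and self.bootstrap:
            return "leader"
        return "follower"


def self_elected_leaders(servers: List[Server]) -> List[str]:
    """Names of the servers that would come up believing they lead."""
    return [s.name for s in servers if s.role_after_restart() == "leader"]


def is_split_brain(servers: List[Server]) -> bool:
    """More than one self-elected leader means the cluster has split."""
    return len(self_elected_leaders(servers)) > 1
```

With one wiped node the model yields a single leader. With two wiped nodes restarting together, `is_split_brain` returns `True`, which mirrors the failure described above.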

As mentioned earlier, Diego components depend heavily on Consul’s availability in order to operate. This means that Consul failures that are not easily or quickly recoverable result in failures in Diego components. As an example, the GoRouter requires routes to be continuously refreshed by the Route-Emitter for their corresponding applications to remain routable. In the absence of Consul, however, the Route-Emitter does not refresh routes, so the GoRouter’s routes gradually go stale and it starts dropping them. As a result, applications registered with Diego become unreachable from outside the Cloud Foundry ecosystem.
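The route-freshness behavior can be sketched as a table whose entries expire unless they are refreshed. This is a minimal model, not the GoRouter's code. The `RouteTable` class and the 120-second staleness threshold are assumptions chosen for illustration.

```python
from typing import Dict, List


class RouteTable:
    """Routes stay routable only while an emitter keeps refreshing them."""

    def __init__(self, stale_after: float = 120.0) -> None:
        self.stale_after = stale_after
        self._last_seen: Dict[str, float] = {}

    def register(self, route: str, now: float) -> None:
        """Record a registration or refresh for `route` at time `now`."""
        self._last_seen[route] = now

    def prune(self, now: float) -> List[str]:
        """Drop and return the routes not refreshed within the threshold."""
        stale = [route for route, seen in self._last_seen.items()
                 if now - seen > self.stale_after]
        for route in stale:
            del self._last_seen[route]
        return sorted(stale)

    def routes(self) -> List[str]:
        """Routes that are currently routable."""
        return sorted(self._last_seen)
```

As long as `register` keeps being called, `prune` removes nothing. Once the emitter stops (for example, because it can no longer hold its lock in Consul), the next `prune` after the threshold drops the route and the application becomes unreachable.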

Similarly, BBS instances rely on Consul to decide on the active BBS. However, reliance on Consul has also created a circular dependency among BBS instances. The master instance holding the lock in Consul is also the one that updates the DNS record so that other components can do service discovery. In the absence of Consul, BBS instances lose track of the active instance, and each individual instance may independently try to register itself with a non-existent Consul. This is exacerbated when other components try to find and communicate with the active BBS while the DNS record is invalid and none of the passive BBS instances can update it. The combination of a missing master BBS instance and other components losing track of the BBS service entirely can bring the entire Diego subsystem down.

Issues like the ones described above prompted us to look for alternative approaches that improve the stability of the Diego components by rethinking the design of the system and potentially limiting the use of Consul.

In Part II of this series, we will describe how we worked around some of these issues and arrived at a solution that increased the overall stability of the system.

Conclusion

It’s important for us to mention that the purpose of this blog post is not to devalue the great deal of effort and engineering work that has been put into developing Consul. Consul is in fact a great piece of technology that, if used in the right setting, can solve very important service discovery problems in a distributed system.

As mentioned earlier, it is primarily the challenges of using Consul, as a stateful distributed system, together with BOSH as a deployment tool that assumes no system state, that resulted in problems when using Consul in Cloud Foundry. It’s entirely possible that with different constraints, Consul would have been a correct choice for Diego and other parts of Cloud Foundry. However, the clashing constraints we listed are not ones we can easily remove, and perhaps a better approach is to rethink our use of Consul to solve the initial problems.

In a follow-up post, we will go over how Cloud Foundry and its runtime system Diego implement a different approach to distributed locking, state and service discovery.

This work is co-authored by Nima Kaviani (IBM), Adrian Zankich (VMware) and Michael Maximilien (IBM).