Update: This post has been updated to reflect changes to Dynamic ASG behavior as of cf-networking-release v3.10.0 and capi-release v1.131.0.

Overview

One of the most important things people can do to secure their Cloud Foundry environment is to restrict the apps running on it from communicating freely over the network. This can prevent compromised applications from downloading malicious software into the container, and it also limits what a compromised application can affect internally.

To accomplish this, Cloud Foundry offers Application Security Groups (ASGs). ASGs are sets of network Access Control Lists (ACLs) that Cloud Foundry applies to traffic egressing app containers. They allow operators to let applications reach only the things they need to function properly, and deny access to everything else. Each ASG contains a set of rules based on destination IP, port, and protocol. If an app doesn’t have an ASG rule covering a particular connection, that connection is blocked. For more information, take a look at Cloud Foundry’s ASG documentation.
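To make the default-deny behavior concrete, here is a minimal Python sketch of how a set of ASG rules decides whether an outbound connection is allowed. It models the semantics only, not how Cloud Foundry enforces them (the platform translates rules into IPTables rules). The `protocol`, `destination`, and `ports` fields mirror keys in an ASG rules JSON file; the `AsgRule` class and `is_allowed` function are hypothetical, and ICMP type/code matching, logging, and IPv6 ranges are deliberately left out.

```python
import ipaddress
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class AsgRule:
    """One egress rule; field names mirror the keys of an ASG rules JSON file."""
    protocol: str                # "tcp", "udp", "icmp", or "all"
    destination: str             # single IP, "start-end" range, or CIDR block
    ports: Optional[str] = None  # tcp/udp only, e.g. "443", "80,443", "8080-8090"


def _destination_matches(destination: str, ip: str) -> bool:
    addr = ipaddress.ip_address(ip)
    if "-" in destination:  # inclusive IP range such as "10.0.0.1-10.0.0.9"
        start, end = (ipaddress.ip_address(p.strip()) for p in destination.split("-", 1))
        return start <= addr <= end
    # A bare IP becomes a /32 network, so one code path covers single IPs and CIDRs.
    return addr in ipaddress.ip_network(destination, strict=False)


def _port_matches(ports: Optional[str], port: Optional[int]) -> bool:
    if ports is None or port is None:
        return False
    for part in ports.split(","):
        if "-" in part:  # inclusive port range such as "8080-8090"
            low, high = (int(p) for p in part.split("-", 1))
            if low <= port <= high:
                return True
        elif int(part) == port:
            return True
    return False


def is_allowed(rules: Iterable[AsgRule], protocol: str, ip: str,
               port: Optional[int] = None) -> bool:
    """Whitelist semantics: a connection is allowed only if some rule covers it."""
    for rule in rules:
        if rule.protocol not in ("all", protocol):
            continue
        if not _destination_matches(rule.destination, ip):
            continue
        if rule.protocol in ("tcp", "udp") and not _port_matches(rule.ports, port):
            continue
        return True
    return False  # no rule matched: default deny


rules = [AsgRule("tcp", "10.0.11.0/24", "80,443")]
print(is_allowed(rules, "tcp", "10.0.11.7", 443))  # True
print(is_allowed(rules, "tcp", "10.0.11.7", 22))   # False
```

Evaluating rules as an allow-list, and returning `False` when nothing matches, is what gives ASGs their "blocked unless permitted" behavior.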

Static ASGs Are Difficult to Manage at Scale

Organizations running Cloud Foundry have faced two major issues when implementing ASGs to secure their environments: split personas and a lack of auditing.

Split Personas

Only administrators of a Cloud Foundry environment can manage ASGs or change how ASGs are bound to applications. Previously, those updates did not take effect in running containers until the applications were restarted or restaged with `cf restart` or `cf restage`. As a result, enforcing the proper security posture of an app container required two personas: the admin to update and apply the ASG, and the developer to restart the application safely.

The problem compounds when admins change default ASGs that apply to all apps on the platform. Getting every dev team to restart their apps for security compliance can be a major hassle, especially as the platform grows to host thousands of developers and apps.

Lack of Auditing

Operators have had a hard time auditing changes to ensure all apps were locked down as desired. At the same time, it was difficult for developers to determine whether rule changes had been applied to their applications, or whether any rule changes would take effect on the next restart.

Split Personas and a Lack of Auditing Led to a Lot of Pain

Let’s look at this first from the operator’s perspective:

- The security team updates foundation-wide ASGs.

- The security team and operations team ask dozens of developer teams to restart their apps.

- Weeks go by and the security and operations teams are still trying to get developers to restart applications and coordinate change windows.

- With no built-in auditing, there is no easy way for the security team to know when the new ASG is in full effect.

Now let’s look at a different scenario from the developer’s perspective:

- The dev team updates some code, and restarts their app.

- Connections to one of the app’s dependencies start failing.

- The dev team frantically rolls back, and tries to understand what went wrong.

- The rollback runs into the same problem of failed connections to the dependency.

- Eventually the operations team is pulled into the outage, and determines that an ASG was updated months ago to remove access to that service because the only app listed as requiring the service was shut down.

- Access to the service is re-applied, and the apps get restarted.

Dynamic Updates Save the Day!

Dynamic ASGs provide a solution to these problems by continuously updating the rules of running containers based on what is defined in the Cloud Foundry API.

- Operators no longer have to coordinate with developers to restart the multitude of apps in the environment.

- Applications emit logs whenever any of their containers is updated with new rules, letting both operators and developers know when rulesets change.

- Applying ASG changes across the board soon after they’re issued makes it easier to correlate those changes with any failures they cause. Instead of teams spending time rolling back application changes, the ASG change becomes the more likely suspect and is rolled back first.

Policy Server Works with ASGs to Enable Dynamic Updates

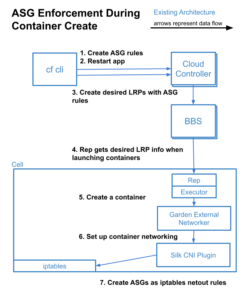

Static ASG Data Flow

Prior to implementing Dynamic ASGs, the data flow from defining an ASG to it becoming an IPTables rule on a running container looked like this:

- The `cf create-security-group` (or similar update/bind/delete) command(s) call the Cloud Controller to modify the ASG definitions.

- When applications are restarted or restaged via the `cf` CLI, Cloud Controller builds the list of rules to apply to the container as part of the container-creation event it creates for Diego.

- Cloud Controller sends the container-creation event to BBS, including the egress rules determined in the previous step.

- Once Diego’s processes determine where the container will run, the Rep process retrieves the egress rules from BBS as part of the Long-Running-Process (LRP) definition.

- Rep passes the egress rule definitions along to Garden when asking it to create the container.

- When setting up the network environment for the container, Garden calls the Garden External Networker with the egress rules.

- Garden External Networker calls the Silk CNI Plugin, and passes it the egress rules.

- Finally, the Silk CNI Plugin translates those egress rules to IPTables rules while setting up the network interface for the new container.

- The IPTables rules for a container are never touched again until that container is torn down. The only way to update the rules is to tell BBS to delete the current LRP, and define a new LRP with new rules.
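The key property of this static flow, that rules are snapshotted when a container is created, can be shown with a toy model. Everything in this sketch (`StaticPlatform`, `Container`, `restart`) is hypothetical and collapses Cloud Controller, BBS, Rep, Garden, and the Silk CNI Plugin into a single copy step.

```python
import copy
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Container:
    egress_rules: List[dict]  # snapshot taken when the container was created


@dataclass
class StaticPlatform:
    """Toy model of the static ASG flow; all names here are hypothetical."""
    asgs: Dict[str, List[dict]] = field(default_factory=dict)       # ASG name -> rules
    bindings: Dict[str, List[str]] = field(default_factory=dict)    # app -> bound ASG names
    containers: Dict[str, Container] = field(default_factory=dict)  # app -> running container

    def current_rules(self, app: str) -> List[dict]:
        # What Cloud Controller would compute from the ASG definitions right now.
        return [rule for name in self.bindings.get(app, []) for rule in self.asgs.get(name, [])]

    def restart(self, app: str) -> None:
        # Restart/restage: a new LRP carries a fresh copy of the rules to a new container.
        self.containers[app] = Container(copy.deepcopy(self.current_rules(app)))


platform = StaticPlatform()
platform.asgs["db"] = [{"protocol": "tcp", "destination": "10.0.5.0/24", "ports": "5432"}]
platform.bindings["orders"] = ["db"]
platform.restart("orders")

platform.asgs["db"] = []  # an admin removes access to the database
print(len(platform.containers["orders"].egress_rules))  # 1: running container unchanged
platform.restart("orders")
print(len(platform.containers["orders"].egress_rules))  # 0: only a restart picks it up
```

The ASG change is invisible to the running container until the next restart, which is exactly the coordination gap described earlier.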

Dynamic ASG Data Flow

In the initial iteration of Dynamic ASGs, the above data flow still occurs, but with a few changes and additions. Overall, it is fairly similar to how container-to-container `network-policies` are applied:

- Policy Server ASG Syncer was added alongside Policy Server to sync ASG data from Cloud Controller. It polls Cloud Controller to determine whether any ASG data has changed since the last poll, and if so, triggers a full sync of ASG data. Note: only changes made via the CF API v3 endpoints will trigger a full sync. If you are using the deprecated v2 API, please update your integrations!

- On each Cell, Vxlan Policy Agent (VPA) polls Policy Server for ASG information, based on the set of spaces it finds for each app running on that VM.

- VPA reviews the current IPTables ruleset for each container based on the data retrieved from Policy Server, and re-issues IPTables rules in a non-destructive manner.

- VPA cleans up any IPTables rules created by itself or the Silk CNI Plugin that should no longer be present.

- On container creation, the rules passed into the Silk CNI Plugin are ignored, and the CNI triggers a sync with VPA for the application container being created, to get the most up to date ruleset.
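The VPA steps above amount to a reconciliation loop: compute the rules each container should have from its app’s space, diff them against what is installed, and apply the difference. The sketch below models that with Python sets rather than IPTables chains. The function names are hypothetical, and we approximate "non-destructive" as adding new rules before removing stale ones, so a still-permitted connection is never briefly blocked. It is not VPA’s actual implementation.

```python
from typing import Dict, Set, Tuple

# Simplified rule representation: (protocol, destination, ports).
Rule = Tuple[str, str, str]


def desired_rules(container_spaces: Dict[str, str],
                  rules_by_space: Dict[str, Set[Rule]]) -> Dict[str, Set[Rule]]:
    """Rules each container should have, looked up via its app's space."""
    return {cid: set(rules_by_space.get(space, set()))
            for cid, space in container_spaces.items()}


def plan_sync(current: Dict[str, Set[Rule]],
              desired: Dict[str, Set[Rule]]) -> Tuple[Dict[str, Set[Rule]], Dict[str, Set[Rule]]]:
    """Diff current against desired state, per container."""
    to_add: Dict[str, Set[Rule]] = {}
    to_remove: Dict[str, Set[Rule]] = {}
    for cid in current.keys() | desired.keys():
        want = desired.get(cid, set())
        have = current.get(cid, set())
        to_add[cid] = want - have
        to_remove[cid] = have - want
    return to_add, to_remove


def apply_sync(current: Dict[str, Set[Rule]],
               desired: Dict[str, Set[Rule]]) -> Dict[str, Set[Rule]]:
    """Apply the diff in place: add new rules first, then remove stale ones."""
    to_add, to_remove = plan_sync(current, desired)
    for cid, rules in to_add.items():
        current.setdefault(cid, set()).update(rules)
    for cid, rules in to_remove.items():
        current[cid].difference_update(rules)
        if cid not in desired:
            del current[cid]  # container is gone: drop its leftover state
    return current
```

Because every cycle recomputes the desired state from scratch, a missed or failed cycle is simply corrected by the next one.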

Dynamic ASGs are the Default Now

As of cf-deployment v20.0.0, Dynamic ASGs are enabled by default. Simply upgrade your Cloud Foundry environment to that version, or later, and VPA will automatically start applying ASG data to live containers on a minutely basis.

To disable Dynamic ASGs, redeploy Cloud Foundry with the `disable-dynamic-asgs.yml` ops file from cf-deployment.

Dynamic ASGs Have a Performance Impact

While we have designed Dynamic ASGs to be highly scalable, the current implementation has some bottlenecks to be aware of, along with dials that can be adjusted and metrics to watch.

Cloud Controller Memory Usage

UPDATE: As of cf-networking-release v3.10.0 and capi-release v1.131.0, `policy_server_asg_syncer` polls a new endpoint to determine if ASG info has changed prior to attempting a sync. This vastly mitigates the Cloud Controller memory concerns described here.
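The update above describes a check-before-sync pattern: poll something cheap, and only pull the full ASG dataset when it changes. A minimal sketch of that pattern follows; `fetch_last_update` and `full_sync` are hypothetical stand-ins for the real Cloud Controller and Policy Server calls, whose names and endpoint are not given here.

```python
import time
from typing import Any, Callable, Optional


class AsgSyncer:
    """Poll a cheap change marker and only run the expensive full sync when it moves.

    fetch_last_update and full_sync are hypothetical callables standing in for
    the real Cloud Controller and Policy Server interactions.
    """

    def __init__(self, fetch_last_update: Callable[[], Any],
                 full_sync: Callable[[], None]) -> None:
        self._fetch_last_update = fetch_last_update
        self._full_sync = full_sync
        self._last_synced_marker: Optional[Any] = None
        self._has_synced = False

    def poll_once(self) -> bool:
        """Run one poll cycle; return True if a full sync was performed."""
        marker = self._fetch_last_update()  # read the marker before syncing
        if self._has_synced and marker == self._last_synced_marker:
            return False  # nothing changed: skip downloading every ASG
        self._full_sync()  # if this raises, the marker is not recorded and we retry
        self._last_synced_marker = marker
        self._has_synced = True
        return True

    def run(self, interval_seconds: float, iterations: int) -> None:
        for _ in range(iterations):
            self.poll_once()
            time.sleep(interval_seconds)
```

Reading the marker before the sync, and recording it only after the sync succeeds, means a change that lands mid-sync or a failed sync is picked up on the next poll.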

Cause

The periodic syncs of ASG data into Policy Server exacerbate a memory leak in Cloud Controller. How quickly this happens depends on both the total size of the ASG ruleset (the number of ASGs plus all of the rules defined within them) and the polling frequency.

Impact

`monit` enforces a maximum memory limit on the `cloud_controller_ng` process and restarts it once it reaches approximately 3.5GB of memory. Because the `api` nodes are redundant, these restarts should not impact the CF API, but they are something to be aware of.

Based on our testing, we estimate that a Cloud Foundry environment with 5 `api` VMs, a 60-second polling interval, and a 2MB ASG dataset would trigger a `cloud_controller_ng` restart about once every 3 days.

Metrics to Watch

- Memory usage on `cloud_controller_ng` processes

- Total dataset size of the `/v3/security_groups?per_page=5000` CF API call

NOTE: If there are more than 5000 ASGs, paginate the API calls and total up the size of each page’s payload.
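To measure the dataset size described above, you can walk the paginated `/v3/security_groups` results and add up the raw payload sizes. This sketch follows the CF v3 API’s `pagination.next.href` links and assumes you supply the `Authorization` header value yourself (for example the output of `cf oauth-token`, which includes the `bearer` prefix). `make_fetch` and `total_security_group_bytes` are illustrative helpers, not part of any CF tooling.

```python
import json
import urllib.request
from typing import Callable, Optional


def make_fetch(auth_header: str) -> Callable[[str], bytes]:
    """Return a fetcher that sends the given Authorization header value."""
    def fetch(url: str) -> bytes:
        req = urllib.request.Request(url, headers={"Authorization": auth_header})
        with urllib.request.urlopen(req) as resp:
            return resp.read()
    return fetch


def total_security_group_bytes(fetch: Callable[[str], bytes], api_url: str,
                               per_page: int = 5000) -> int:
    """Sum the raw payload sizes of every /v3/security_groups page."""
    url: Optional[str] = f"{api_url.rstrip('/')}/v3/security_groups?per_page={per_page}"
    total = 0
    while url:
        body = fetch(url)
        total += len(body)  # count bytes as received, before any parsing
        next_link = json.loads(body).get("pagination", {}).get("next")
        url = next_link["href"] if next_link else None
    return total


# Usage (placeholder API URL; token from `cf oauth-token`):
# size = total_security_group_bytes(make_fetch(token), "https://api.example.com")
```

Passing the fetcher in as a parameter keeps the pagination logic testable without a live foundation.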

Mitigation

While we iterate on the Dynamic ASG architecture to eliminate this behavior, the two most effective mitigations, if `cloud_controller_ng` restarts are causing issues, are to increase the number of `api` VMs in your deployment and/or increase the `policy-server`’s polling interval.
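For a rough feel for how these two levers interact, the single data point quoted earlier (5 `api` VMs, 60-second polling, 2MB dataset, a restart about every 3 days) can be scaled. This is a back-of-the-envelope sketch under a loud assumption: that the leak on each `api` VM grows linearly with the ASG bytes it serves, so restart spacing scales with VM count and polling interval and inversely with dataset size. Real leak behavior may not be linear, and on cf-networking-release v3.10.0 and capi-release v1.131.0 or later, full syncs only happen when ASG data changes.

```python
# Data point quoted in the post (before cf-networking-release v3.10.0 / capi-release v1.131.0).
BASELINE_DAYS_BETWEEN_RESTARTS = 3.0
BASELINE_API_VMS = 5
BASELINE_POLL_INTERVAL_S = 60.0
BASELINE_DATASET_MB = 2.0


def estimated_days_between_restarts(api_vms: int, poll_interval_s: float,
                                    dataset_mb: float) -> float:
    """ASSUMPTION: per-VM leak is linear in the ASG bytes that VM serves.

    Sync requests are assumed to be spread evenly across api VMs, so more VMs or a
    longer interval means fewer bytes per VM per day; a bigger dataset means more.
    """
    vm_factor = api_vms / BASELINE_API_VMS
    interval_factor = poll_interval_s / BASELINE_POLL_INTERVAL_S
    dataset_factor = dataset_mb / BASELINE_DATASET_MB
    return BASELINE_DAYS_BETWEEN_RESTARTS * vm_factor * interval_factor / dataset_factor


print(estimated_days_between_restarts(5, 60, 2))    # 3.0, the baseline
print(estimated_days_between_restarts(10, 120, 2))  # 12.0
```

Under this model, doubling both the `api` VM count and the polling interval stretches the restart spacing fourfold, while doubling the dataset halves it.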

IPTables Rule Count

Cause

Due to the internal design of IPTables, Cells with extremely large IPTables rulesets (>150,000 rules per Cell) will experience additional load when trying to update and manage all of those rules.

Impact

Sync times between VPA and Policy Server will increase, as will VPA’s CPU usage on Cells. This behavior is most apparent during activities such as rolling restarts of Cells, when many auctions are happening and the set of apps running on each Cell changes.

Based on our testing, significant performance hits will be seen above 150,000 rules per Cell. When the rule count per Cell exceeds 500,000, process queue saturation may cause the Cell to become completely unresponsive.

Metrics To Watch

- `vxlan-policy-agent` sync timings:

  - `asgIptablesEnforceTime` – Time taken to update iptables rules with new definitions

  - `asgIptablesCleanupTime` – Time taken to clean up old rulesets

  - `asgTotalPollTime` – Total time taken to sync from policy-server, apply rules, and clean up

- `vxlan-policy-agent` CPU usage

- `netmon`’s `IPTablesRuleCount` – Number of IPTables rules on a given `diego-cell`
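The thresholds in this section, plus the guidance that the polling interval should exceed the sync time, can be turned into a simple check over the metrics above. This is a hypothetical sketch: the `CellMetrics` fields are our own names for values you would pull from your metrics pipeline, and since the unit `asgTotalPollTime` is emitted in is not stated here, convert it to seconds before comparing.

```python
from dataclasses import dataclass
from typing import List

DEGRADED_RULES = 150_000           # thresholds quoted in this post
UNRESPONSIVE_RISK_RULES = 500_000


@dataclass
class CellMetrics:
    iptables_rule_count: int       # netmon's IPTablesRuleCount
    asg_total_poll_time_s: float   # asgTotalPollTime, converted to seconds by the caller
    vpa_poll_interval_s: float     # configured VPA polling interval, in seconds


def cell_warnings(m: CellMetrics) -> List[str]:
    """Return human-readable warnings for one diego-cell (empty list means healthy)."""
    warnings = []
    if m.iptables_rule_count > UNRESPONSIVE_RISK_RULES:
        warnings.append("rule count above 500k: risk of the Cell becoming unresponsive")
    elif m.iptables_rule_count > DEGRADED_RULES:
        warnings.append("rule count above 150k: expect slower syncs and higher VPA CPU")
    if m.asg_total_poll_time_s >= m.vpa_poll_interval_s:
        warnings.append("asgTotalPollTime >= polling interval: syncs cannot keep up")
    return warnings
```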

Mitigation

We highly recommend keeping each Cell below 150,000 IPTables rules by reducing the number of apps per VM. Additionally, the polling interval between VPA and Policy Server can be adjusted so that it stays greater than the time VPA takes to synchronize the rules.

We believe these to be very extreme cases, so if your environment requires more than 150,000 rules per Cell, please reach out to us with your use case so we can assist!
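To apply the 150,000-rule recommendation when sizing Cells, one approach is to derive an observed rules-per-container figure from `IPTablesRuleCount` on an existing Cell and work backwards to a container limit. This is a sketch under the assumption that the rule count grows roughly linearly with the number of containers; the helper names are hypothetical.

```python
RULE_BUDGET_PER_CELL = 150_000  # "fewer than 150,000 rules per Cell", per this post


def observed_rules_per_container(cell_rule_count: int, containers_on_cell: int) -> int:
    """Average IPTables rules per container, from netmon's IPTablesRuleCount.

    Rounds up and includes any non-ASG rules on the Cell, so it errs on the safe side.
    """
    if containers_on_cell <= 0:
        raise ValueError("containers_on_cell must be positive")
    return (cell_rule_count + containers_on_cell - 1) // containers_on_cell  # integer ceil


def max_containers_per_cell(rules_per_container: int,
                            budget: int = RULE_BUDGET_PER_CELL) -> int:
    """Largest container count whose total rule count stays strictly below budget."""
    if rules_per_container <= 0:
        raise ValueError("rules_per_container must be positive")
    return (budget - 1) // rules_per_container
```

For example, a Cell reporting 120,000 rules across 400 containers averages 300 rules per container, which allows at most 499 containers per Cell to stay below 150,000.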

Dynamic ASGs Make Cloud Foundry Better

As the points above show, Dynamic ASGs improve Cloud Foundry in a number of ways:

- Dynamic ASGs mitigate the organizational pain when managing ASGs, as environments scale. Imagine the scenarios illustrated above on a multi-tenant foundation with hundreds of tenants, each with dozens of developer teams, or a foundation with tens of thousands of applications needing a restart!

- Security posture is improved by applying ASG changes to running apps soon after they are made, rather than waiting for each app to restart.

You can get started with Dynamic ASGs by upgrading to cf-deployment v20.2.0 or later. For conceptual documentation about ASGs, please click here. More in-depth documentation on how Dynamic ASGs function is available here.