This work is co-authored by Nima Kaviani (IBM) and Amit Kumar Gupta (VMware).

Introduction

Cloud Foundry is an open-source, highly distributed platform-as-a-service (PaaS) software system for managing the deployment and scaling of applications in the cloud. The promises of scalability, reliability, and elasticity inherent to deployments on Cloud Foundry require the underlying distributed system to implement measures that ensure robustness, resiliency, and failover. As such, Cloud Foundry makes software testing a core part of its software development process.

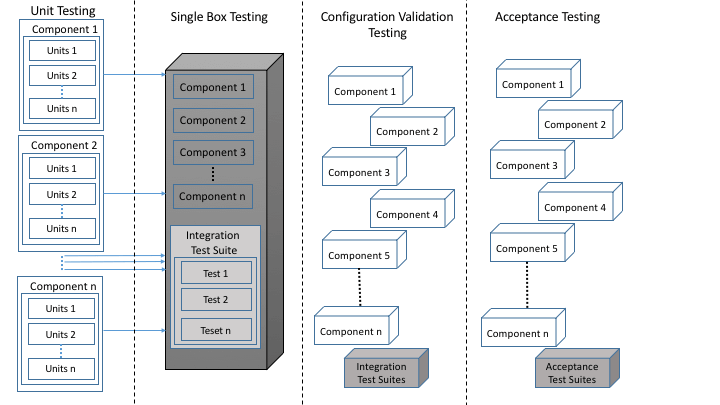

Software testing has long been understood to comprise unit testing, integration testing, smoke testing, acceptance testing, scalability testing, performance testing, and quality-of-service testing. In Cloud Foundry, we advocate testing a distributed system following the pattern shown in Figure 1.

As shown in the first column of the figure, testing in Cloud Foundry starts with running unit tests against the smallest points of contract in the system (i.e., method calls in each system component). Once the unit tests pass, integration tests are run to validate the behavior of interacting components as part of a single coherent software system (second column) running on a single box (e.g., a VM or a bare-metal machine). However, while these tests validate the overall behavior of the system as a monolith, they do not guarantee that the system behaves correctly in a distributed deployment.

Once integration tests pass, the next step (third column) is to validate a distributed deployment of the system and run smoke tests. Configuration validation is required to ensure that software components can successfully start, resolve each other’s DNS addresses, connect, and communicate. Upon successful deployment, smoke tests run as a second suite of integration tests that check the overall functionality of the distributed deployment. Successful configuration of the software and execution of the smoke tests prepare us to validate that the system behaves acceptably. This verification is done by running acceptance tests (fourth column).
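
To make the gating in Figure 1 concrete, here is a minimal Python sketch (not code from the Cloud Foundry pipelines) in which each column is a stage that must pass before the next one runs. The stage names and the `Stage` and `run_pipeline` helpers are hypothetical, and each suite is stubbed as a function that returns pass or fail.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Stage:
    name: str
    run: Callable[[], bool]  # returns True when the stage's suite passes


def run_pipeline(stages: List[Stage]) -> Tuple[List[str], Optional[str]]:
    """Run stages in order, stopping at the first failure.

    Returns the names of the stages that passed and the name of the
    failing stage (None if every stage passed)."""
    passed: List[str] = []
    for stage in stages:
        if not stage.run():
            return passed, stage.name
        passed.append(stage.name)
    return passed, None


# Stand-in suites: the distributed deployment fails its smoke tests,
# so the acceptance tests never run.
stages = [
    Stage("unit", lambda: True),
    Stage("integration", lambda: True),
    Stage("deploy-and-validate-config", lambda: True),
    Stage("smoke", lambda: False),
    Stage("acceptance", lambda: True),
]
passed, failed = run_pipeline(stages)
print(passed, failed)
```

Stopping at the first failing stage mirrors the figure: there is little value in running acceptance tests against a deployment whose smoke tests already fail.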

The steps shown in Figure 1 enumerate the minimum set of test suites we consider necessary to validate a distributed system. These suites can, however, be extended to include scalability tests, performance tests, upgrade stability tests, etc. These additional suites follow the same pattern as the fourth column in Figure 1: a test suite is deployed and run alongside a distributed deployment of the system.

Testing Cloud Foundry

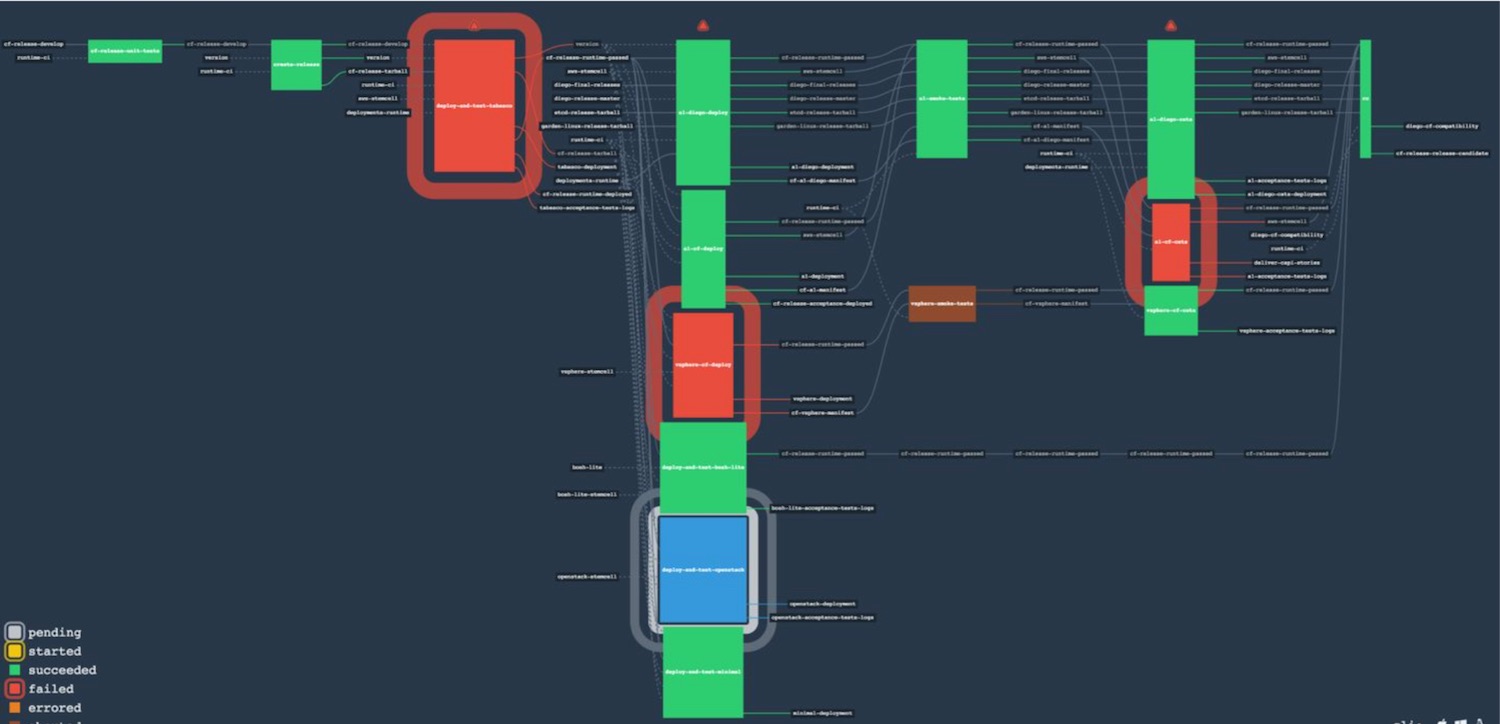

Testing in Cloud Foundry is done at multiple layers. Each component in Cloud Foundry implements its own independent set of unit tests, integration tests, acceptance tests, etc. Through a continuous integration process, every piece of code for each individual component goes through these component-specific tests before making it into a release candidate. Once a release is cut for a given component, it becomes available to be consumed by the Cloud Foundry release, where all the individual components are integrated and pass through a separate, comprehensive set of integration, smoke, and acceptance tests before making it into a final release. Figure 2 shows the Concourse pipeline for testing and generating the final release of Cloud Foundry.

As shown in Figure 2, the pipeline for Cloud Foundry is quite complex and consists of various tiers of testing and deployment. New code travels from left to right, hitting the unit tests first. An important characteristic of the Cloud Foundry release is that the entire system can run as a complete, functional system in a Vagrant box within a development environment. This makes initial testing highly efficient: for any new piece of code, developers run unit tests locally on their development machines, and only then is the code pushed to the repository to travel through the pipeline.

Generating the Cloud Foundry release starts by creating a single artifact that can be configured and deployed to multiple downstream test environments. This test release artifact, encapsulating the Cloud Foundry components, is created (second column) and deployed to an Amazon Web Services (AWS) environment internally referred to as Tabasco (third column). The distributed deployment of Cloud Foundry to cloud environments like Tabasco is managed by BOSH.

However, BOSH is more than just a deployment tool. It is a toolchain central to solving the challenges of release engineering, deployment, lifecycle management, and monitoring of a distributed system. Code for each component is organized so that it can be packaged as a “BOSH release”, which is essentially an archive of all the source code along with templates for startup scripts and configuration files that follow certain established conventions. BOSH consumes a “deployment manifest” that declares which cloud environment to deploy to, which releases to deploy, and what the deployment topology looks like in terms of networking, instance counts, cloud compute resources, etc. This encapsulation ensures that a consistent artifact is promoted from initial check-in to the final Cloud Foundry release. Because BOSH is designed for managing long-running, mission-critical distributed systems, the BOSH releases certified together by the release integration pipeline can then be made available for consumption by end users.
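
As a rough illustration of what a deployment manifest declares, the sketch below models one as a Python dictionary loosely shaped like a BOSH v2 manifest (`releases`, `instance_groups`, `jobs`), together with a hypothetical pre-flight check that every job refers to a declared release. The deployment name, job names, and versions are illustrative only, and `undeclared_releases` and `total_instances` are not part of BOSH, which performs its own validation at deploy time.

```python
# Illustrative manifest shaped loosely like a BOSH v2 manifest.
# Deployment, instance group, job, and version values are hypothetical.
manifest = {
    "name": "cf-example",
    "releases": [
        {"name": "capi", "version": "latest"},
        {"name": "diego", "version": "latest"},
    ],
    "instance_groups": [
        {
            "name": "api",
            "instances": 2,
            "azs": ["z1", "z2"],
            "networks": [{"name": "default"}],
            "jobs": [{"name": "cloud_controller_ng", "release": "capi"}],
        },
        {
            "name": "diego-cell",
            "instances": 3,
            "azs": ["z1", "z2"],
            "networks": [{"name": "default"}],
            "jobs": [
                {"name": "rep", "release": "diego"},
                {"name": "garden", "release": "garden-runc"},  # not declared above
            ],
        },
    ],
}


def undeclared_releases(manifest):
    """Return (instance_group, job, release) for every job whose release
    is not listed under the manifest's top-level "releases"."""
    declared = {release["name"] for release in manifest["releases"]}
    return [
        (group["name"], job["name"], job["release"])
        for group in manifest["instance_groups"]
        for job in group["jobs"]
        if job["release"] not in declared
    ]


def total_instances(manifest):
    """Sum the instance counts across all instance groups."""
    return sum(group["instances"] for group in manifest["instance_groups"])


print(undeclared_releases(manifest))  # [('diego-cell', 'garden', 'garden-runc')]
print(total_instances(manifest))      # 5
```

Catching this kind of inconsistency before a deploy is cheap; catching it after a failed deploy in a shared environment can block every team using that pipeline.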

The BOSH-deployed Tabasco environment undergoes all acceptance and integration tests to verify the full functionality of the system. This makes Tabasco the heart of the release integration pipeline: if tests fail at this stage, nothing proceeds. Once the Tabasco tests succeed, Cloud Foundry undergoes a comprehensive set of pre-flight tests. Remember that Cloud Foundry is a PaaS, and its promise is that it can run on any IaaS cloud. To validate this, the Tabasco-verified release is deployed to an AWS environment with a larger number of components (A1), an OpenStack environment, a vSphere environment, and a single-instance Vagrant environment (known as BOSH-lite). A separate set of smoke tests and acceptance tests is executed against each of these deployments. Once all these tests pass, Cloud Foundry is considered ready for release.
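
The gate-then-fan-out structure can be sketched as follows. This is a minimal illustration assuming each environment's deploy, smoke, and acceptance run can be wrapped in a function returning pass or fail; `certify` and the environment keys are hypothetical names, and running the environments in parallel with a thread pool is our assumption rather than a description of the actual Concourse configuration.

```python
from concurrent.futures import ThreadPoolExecutor


def certify(release, gate, environments):
    """Gate on one environment, then fan out to the others in parallel.

    gate: callable(release) -> bool, standing in for the Tabasco suites.
    environments: dict of name -> callable(release) -> bool, each standing
    in for a deploy plus smoke and acceptance tests on one IaaS.
    Returns (ready_for_release, per-environment results)."""
    if not gate(release):
        # Nothing proceeds past a failing gate.
        return False, {"tabasco": False}
    results = {"tabasco": True}
    with ThreadPoolExecutor() as pool:
        futures = {name: pool.submit(run, release) for name, run in environments.items()}
        for name, future in futures.items():
            results[name] = future.result()
    return all(results.values()), results


environments = {
    "aws-a1": lambda release: True,
    "openstack": lambda release: True,
    "vsphere": lambda release: False,  # simulated failure
    "bosh-lite": lambda release: True,
}
ready, results = certify("cf-candidate", lambda release: True, environments)
print(ready, results)
```

A single failing IaaS is enough to hold the release, which reflects the promise that Cloud Foundry runs on every supported cloud, not just most of them.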

While the suites above test the functionality of every potential new release after it has been configured and deployed, it is also important to validate the functionality of the whole system during an update. To this end, the Tabasco and A1 environments have a series of applications deployed to them, referred to as canaries. The role of the canaries is to verify that new releases of Cloud Foundry roll out with zero downtime: a third-party service constantly pings them to confirm they stay up while new releases are rolled out.
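
A canary check can be approximated by probing each canary application at a fixed interval during a rollout and recording any round in which it fails to respond. The sketch below assumes plain HTTP health probes; since the third-party service is not described here, `http_probe`, `watch_canaries`, and the canary names are hypothetical stand-ins. The usage example swaps in a scripted probe so it runs offline.

```python
import time
import urllib.request


def http_probe(url, timeout=2.0):
    """Return True if the URL answers with a 2xx status within the timeout."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 300
    except OSError:  # covers URLError, HTTPError, and socket timeouts
        return False


def watch_canaries(canaries, probe, rounds, interval=1.0):
    """Probe every canary once per round.

    Returns a dict mapping canary name to the round numbers in which it
    failed to respond; empty lists everywhere mean zero downtime."""
    failures = {name: [] for name in canaries}
    for round_number in range(rounds):
        for name, target in canaries.items():
            if not probe(target):
                failures[name].append(round_number)
        if round_number < rounds - 1:
            time.sleep(interval)
    return failures


# Offline usage: a scripted probe stands in for http_probe, simulating one
# canary dropping a request in round 2 of a rollout.
scripted = {
    "app-a": iter([True, True, False, True]),
    "app-b": iter([True, True, True, True]),
}
failures = watch_canaries(
    {"canary-a": "app-a", "canary-b": "app-b"},
    probe=lambda target: next(scripted[target]),
    rounds=4,
    interval=0,
)
zero_downtime = all(not rounds_failed for rounds_failed in failures.values())
print(failures, zero_downtime)
```

Recording which round failed, rather than only a pass/fail flag, makes it easier to correlate an outage with the point in the rollout where it happened.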

Challenges with Testing Cloud Foundry

Despite the intricacies of testing a distributed system, Cloud Foundry has adopted some of the best practices for testing and delivering a highly distributed PaaS. However, the testing model described here still has its own set of challenges and room for improvement. Below, we describe the next set of changes being considered to make the entire process more efficient.

Circular Dependencies

One of the current challenges with the way Cloud Foundry is tested is test ownership, and how different teams should synchronize their development process with their release process. The challenge surfaced when the team responsible for developing the Cloud Controller component (CAPI) had to work on the new version (v3) of their API while simultaneously maintaining the current version. Because CAPI shared the same set of acceptance tests with the main Cloud Foundry release, new acceptance tests for the v3 Cloud Controller API would break the main release pipeline, which did not yet include any of the new v3 features. This happened mostly because the two pipelines shared boundaries for the features they tested with their acceptance tests. We are working on coupling each team’s acceptance tests with the release that team produces, so that a team’s new acceptance tests are integrated into the main release only when the team’s release itself is integrated.
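
One way to picture this coupling is to tag each acceptance test with the API features it exercises and run only the tests that the release under test actually provides. Tag-based selection is a technique we substitute here for illustration; the test names, the `owner` and `requires` fields, and the `select_tests` helper are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AcceptanceTest:
    name: str
    owner: str                          # team that owns the test
    requires: frozenset = frozenset()   # API features the test exercises


def select_tests(tests, release_features):
    """Split tests into those the release under test can satisfy and
    those that must wait until a release providing the features lands."""
    selected, deferred = [], []
    for test in tests:
        if test.requires <= release_features:  # subset check
            selected.append(test.name)
        else:
            deferred.append(test.name)
    return selected, deferred


tests = [
    AcceptanceTest("push-app", "capi", frozenset({"v2"})),
    AcceptanceTest("v3-tasks", "capi", frozenset({"v3"})),
    AcceptanceTest("ssh-to-app", "diego"),  # no feature requirements
]
print(select_tests(tests, frozenset({"v2"})))
# (['push-app', 'ssh-to-app'], ['v3-tasks'])
```

Under this scheme, the v3 tests stay deferred in the main release pipeline until a CAPI release providing `v3` is integrated, at which point they start running without anyone editing the shared suite.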

Test Redundancies and Shared Pipelines

At the moment, different Cloud Foundry components take an exhaustive approach to testing by running the full suite of Cloud Foundry acceptance tests. Also, some components have tests developed and running in their own pipelines that arguably belong in the main Cloud Foundry release pipeline. Together, these make the test process and its feedback cycle long, which is particularly problematic when more than one team relies on the same release pipeline and changes must wait for a healthy pipeline before being integrated. One example is the set of upgrade stability tests run in the Diego pipeline, which ideally should move to the main Cloud Foundry release pipeline. Another example is Diego sharing the same pipeline with the persistence team (PERSI): a broken pipeline can significantly block the Diego team and the other teams involved.

Test Ownership

The two issues discussed above also highlight the challenge of test ownership. It has proven difficult to draw clear boundaries around ownership of acceptance and integration tests, mostly because, in the current model, teams push their tests up to the release integration pipeline. An alternative would be for teams to maintain their own test packages and pull the necessary tests from any other team’s codebase into their own pipeline during internal testing. Once satisfied, they can push their tests together with their code into the release integration pipeline to be tested against the full Cloud Foundry release.
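
The pull-based alternative can be sketched as a registry of per-team test packages from which a team assembles its own suite. The registry layout, package names, and helpers below are hypothetical; the point is that each test keeps its owner attached, so a failure can be routed to the team that owns it.

```python
def assemble_suite(team, registry, pulls):
    """Build a team's suite: its own tests first, then the tests it pulls.

    registry: team -> {package name -> [test names]} (hypothetical layout).
    pulls: [(owner team, package name)] to pull from other teams.
    Each entry is (owner, test), so a failure is routed to the owning team."""
    suite = [
        (team, test)
        for tests in registry[team].values()
        for test in tests
    ]
    for owner, package in pulls:
        suite.extend((owner, test) for test in registry[owner][package])
    return suite


def owners_of(suite, failed_tests):
    """Return the sorted set of teams that own any of the failed tests."""
    return sorted({owner for owner, test in suite if test in failed_tests})


registry = {
    "diego": {"cells": ["placement", "evacuation"]},
    "capi": {"apps": ["push", "scale"], "v3": ["tasks"]},
}
suite = assemble_suite("diego", registry, pulls=[("capi", "apps")])
print(suite)
# [('diego', 'placement'), ('diego', 'evacuation'), ('capi', 'push'), ('capi', 'scale')]
print(owners_of(suite, {"scale"}))  # ['capi']
```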

Promoting Components into a Final Release

Another challenge is how code for a component is encapsulated and promoted through different pipelines, or through different stages within a pipeline. Which releases to combine depends on whether the pipeline in question belongs to an individual component or is the final release integration pipeline. An individual component will want to combine the most recent collection of releases certified by the integration pipeline with the current edge release of the component itself. Once this combination is deployed and tested in the component’s own CI environment, the component’s release becomes a candidate for the final integration pipeline. This also answers the question for the final integration pipeline: it consumes the output releases of each individual component’s test and release pipeline.
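
The two combination rules described above amount to overlaying versions onto the last co-certified set of releases. The sketch below assumes a release set can be represented as a mapping from release name to version; the function names and version strings are hypothetical.

```python
def component_candidate(certified, component, edge_version):
    """Releases a component pipeline deploys: the last co-certified set,
    with this component's own release replaced by its edge build."""
    combination = dict(certified)  # copy, so the certified set is untouched
    combination[component] = edge_version
    return combination


def integration_candidate(certified, component_outputs):
    """Releases the final integration pipeline deploys: the last certified
    set, updated with whatever each component pipeline has passed."""
    combination = dict(certified)
    combination.update(component_outputs)
    return combination


certified = {"capi": "1.0.0", "diego": "0.9.0", "garden-runc": "1.2.0"}  # hypothetical versions
print(component_candidate(certified, "diego", "0.10.0-dev.3"))
# {'capi': '1.0.0', 'diego': '0.10.0-dev.3', 'garden-runc': '1.2.0'}
print(integration_candidate(certified, {"diego": "0.10.0", "capi": "1.1.0"}))
# {'capi': '1.1.0', 'diego': '0.10.0', 'garden-runc': '1.2.0'}
```

Because each component only ever changes its own entry, a component pipeline never tests against another team’s uncertified edge build.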

Configuration Encapsulation of Components

A final Cloud Foundry release (in the conventional sense of the term “release”) is primarily a collection of co-certified BOSH releases encapsulating the various components. The templates and tooling for generating Cloud Foundry deployment manifests for BOSH are updated by every team contributing a component to Cloud Foundry. Because of this, problems similar to those of test ownership discussed above can occur. For example, if one team renames a deployment manifest property that controls some aspect of their component’s configuration, what happens if the deployment manifests used in other component pipelines, or in the final release integration pipeline, are not updated accordingly? Multiple pipelines can break, slowing down several teams. For this reason, configuration changes must be backwards-compatible unless that is truly impossible. This also requires effective communication between teams so that pipelines are promptly updated, allowing the original team to remove the deprecated configuration as soon as possible.
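
A common way to keep a rename backwards-compatible is to read the new property name first and fall back to the deprecated one, emitting a warning, until every pipeline has migrated. The sketch below shows this pattern in Python for brevity; BOSH job templates are ERB files, so this is only an analogy, and the property names and `get_property` helper are hypothetical.

```python
import warnings

_MISSING = object()  # sentinel so that None can still be a valid default


def get_property(properties, name, deprecated_name=None, default=_MISSING):
    """Look up a manifest property, falling back to its deprecated name.

    Preferring the new name while still honoring the old one lets a rename
    ship without breaking manifests that other pipelines have not updated."""
    if name in properties:
        return properties[name]
    if deprecated_name is not None and deprecated_name in properties:
        warnings.warn(
            f"property '{deprecated_name}' is deprecated; use '{name}'",
            DeprecationWarning,
            stacklevel=2,
        )
        return properties[deprecated_name]
    if default is _MISSING:
        raise KeyError(name)
    return default


with warnings.catch_warnings(record=True) as caught:
    warnings.simplefilter("always")
    old_style = get_property(
        {"example.old_timeout": 30}, "example.timeout_seconds", "example.old_timeout"
    )
new_style = get_property(
    {"example.timeout_seconds": 45}, "example.timeout_seconds", "example.old_timeout"
)
print(old_style, new_style, len(caught))  # 30 45 1
```

The deprecation warning gives other teams a visible signal to update their manifests, after which the fallback can be removed.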

Conclusion

We discussed the overall process of testing Cloud Foundry as a highly distributed platform-as-a-service (PaaS) system, along with a series of challenges encountered during distributed testing of Cloud Foundry. We believe these issues are not specific to Cloud Foundry and apply to any distributed system that requires extensive testing. One important takeaway from the discussion above is that the process of testing a software system is iterative and incremental. We have worked hard to fix issues with the initial testing process, and we will continue to make the overall process more efficient and reliable.

Acknowledgement: Special thanks to Dr. Michael Maximilien (IBM) for his suggestions and comments on this blog post.